Gait recognition has emerged as a promising biometric technology due to its ability to operate at a distance without subject cooperation. While pose-based methods offer advantages over appearance-based approaches in robustness and interpretability, their performance has been limited by the sparse keypoint representations of current pose estimation frameworks.

We identify two critical limitations: (1) incomplete motion representation due to insufficient keypoints for dynamic body parts, and (2) lack of shape information from minimal skeleton points. This paper presents DPGait, a novel framework that addresses these challenges through innovations in both upstream processing and downstream modeling. First, we enhance pose estimation by extending the standard COCO keypoint format with additional motion-sensitive points and shape-descriptive keypoints inspired by human mesh estimation. Second, we propose a divide-and-conquer modeling strategy that processes dense keypoints through group convolution with cross-group attention, coupled with multi-granularity supervision for improved training.

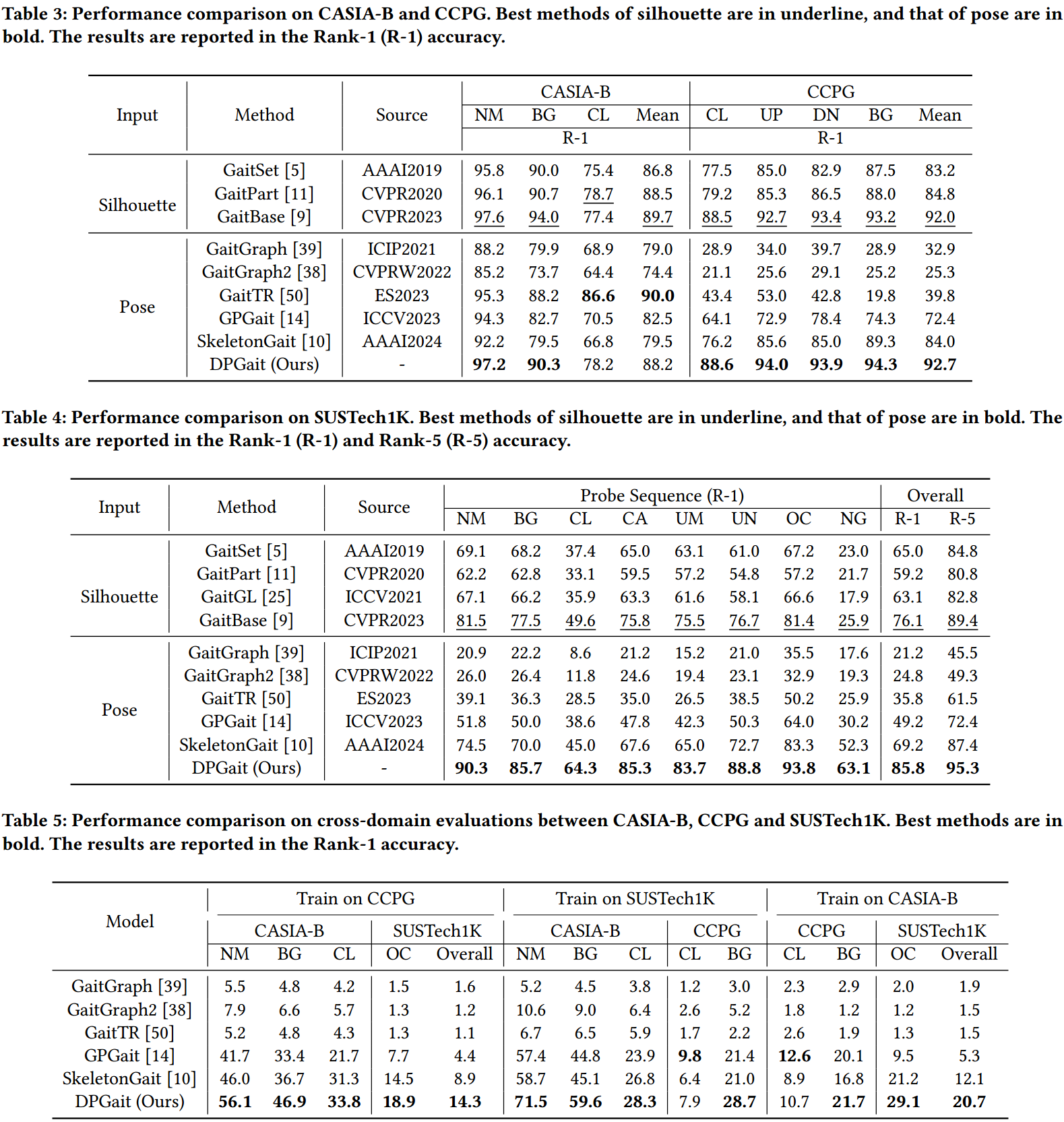

Our comprehensive experiments demonstrate state-of-the-art performance in pose-based gait recognition, achieving 85.8% rank-1 accuracy on SUSTech1K and surpassing leading silhouette-based methods for the first time. The results validate that dense pose representation, combined with our modeling approach, significantly advances the field of gait recognition.
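For readers unfamiliar with the metric, rank-1 accuracy is the fraction of probe sequences whose closest gallery sequence shares their identity. The snippet below is a minimal sketch of that definition only. It is not the evaluation code of this repository: the function name `rank1_accuracy`, the toy 2-D embeddings, and the use of Euclidean distance are all assumptions made for illustration, and the actual SUSTech1K protocol may differ in its probe/gallery split and distance measure.

```python
import math


def rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids):
    """Fraction of probes whose nearest gallery sample has the same identity."""
    hits = 0
    for feat, pid in zip(probe_feats, probe_ids):
        # Index of the closest gallery embedding (Euclidean distance, assumed).
        nearest = min(
            range(len(gallery_feats)),
            key=lambda j: math.dist(feat, gallery_feats[j]),
        )
        hits += gallery_ids[nearest] == pid
    return hits / len(probe_feats)


# Toy data (hypothetical): one gallery embedding per identity.
gallery_feats = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
gallery_ids = ["A", "B", "C"]

# Four probes; the third belongs to "C" but lies closest to "B".
probe_feats = [[0.1, -0.1], [0.9, 1.2], [2.0, 2.0], [4.8, 5.1]]
probe_ids = ["A", "B", "C", "C"]

accuracy = rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids)
print(f"rank-1 accuracy: {accuracy:.2f}")  # 3 of 4 probes match -> 0.75
```

In practice the embeddings would come from the trained gait model rather than hand-written lists, and the search would be vectorized.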

We would like to express our gratitude to the contributors of the following frameworks and tools, which were instrumental in our experiments and framework development:

    @article{peng2025dpgait,
      author  = {Wenpeng Lang and Saihui Hou and Yongzhen Huang},
      title   = {Beyond Sparse Keypoints: Dense Pose Modeling for Robust Gait Recognition},
      journal = {ACM MM},
      year    = {2025},
    }